A few well-publicized recent papers have tempered the hype surrounding deep learning. The papers show both that images can be subtly altered to induce misclassification and that seemingly random garbage images that receive high-confidence classifications can easily be generated. A wave of press has sensationalized the message. Several blog posts, a YouTube video, and other coverage have amplified and occasionally distorted the results, professing the gullibility of deep networks.

Given the hoopla, it's appropriate to examine these findings. While some are intriguing, others are less surprising. Some are nearly universal problems with machine learning in adversarial settings. Further, after examining these findings, it seems clear that they are only shocking given an unrealistic conception of what deep networks actually do, i.e., an unrealistic expectation that modern feed-forward neural networks exhibit human-like cognition.

The criticisms are two-fold, stemming from two separate papers. The first, "Intriguing Properties of Neural Networks", is a paper published last year by Google's Christian Szegedy and others. In it, they reveal that one can subtly alter images in ways imperceptible to humans and yet induce misclassification by a trained convolutional neural network (CNN).

To be clear, this paper is well-written, well-conceived, and presents modest but insightful claims. The authors present two results. The main result shows that random linear combinations of the hidden units in the final layer of a neural network are semantically indistinguishable from the units themselves. They suggest that the space spanned by these hidden units is actually what is important and not which specific basis spans that space.

The second, more widely covered claim regards the altering of images. The authors non-randomly alter a set of pixels so as to induce misclassification. The result is a seemingly identical but misclassified image. This finding raises interesting questions about the applications of deep learning to adversarial situations. The authors also point out that this challenges the smoothness assumption, i.e., that examples very close to each other should have the same classification with high probability.

The adversarial case is definitely worth thinking about. Still, optimizing images for misclassification requires access to the model, and this scenario may not always be realistic. A spammer, for example, may be able to send out emails and see which ones are classified as spam by Google's filter, but is unlikely to gain access to Google's spam-filtering algorithm in order to craft maximally spam-like emails that nevertheless slip through. Similarly, to fool deep learning face detection software, one would need access to the underlying convolutional neural net in order to precisely doctor the image.

It's worth noting that nearly all machine learning algorithms are susceptible to adversarially chosen examples. Consider a logistic regression classifier with thousands of features, many of which have non-zero weight. If one such feature is numerical, the value of that feature could be set very high or very low to induce misclassification, without altering any of the other thousands of features. To a human who could only perceive a small subset of the features at a time, this alteration might not be perceptible. Alternatively, if any features had very high weight in the model, they might only need to have their values shifted a small amount to induce misclassification. Similarly, for decision trees, a single binary feature might be switched to direct an example into the wrong partition at the final split.
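To make the logistic regression argument concrete, here is a minimal sketch in plain Python. The model, its weights, and the example are all made up for illustration (none of it comes from either paper). The point is only that changing one feature out of a thousand can be enough to flip the prediction.

```python
import math

def predict_proba(weights, bias, x):
    """Probability of the positive class under a logistic regression model."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical model: 999 features with small weights and one with a large weight.
n_features = 1000
weights = [0.01] * n_features
weights[0] = 5.0
bias = -12.99  # chosen so the clean example below gets a logit of about 2

clean = [1.0] * n_features
p_clean = predict_proba(weights, bias, clean)

# Attack A: push a single low-weight feature to an extreme value.
extreme = list(clean)
extreme[500] = -300.0
p_extreme = predict_proba(weights, bias, extreme)

# Attack B: nudge the single high-weight feature by a small amount.
nudged = list(clean)
nudged[0] = 0.4
p_nudged = predict_proba(weights, bias, nudged)

print(f"clean:   {p_clean:.3f}")
print(f"extreme: {p_extreme:.3f}")
print(f"nudged:  {p_nudged:.3f}")
```

The clean example is classified as positive, while both altered examples fall below 0.5 even though 999 of their 1000 features are untouched.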

This pathological case for neural networks is different in some ways. One crucial difference is that the values taken by pixels are constrained. Still, given nearly any machine learning model with many features and many degrees of freedom, it is easy to engineer pathological adversarial examples. This is true even for much simpler models that are better understood and come with theoretical guarantees. Perhaps we should not be surprised that deep learning too is susceptible to adversarially chosen examples.

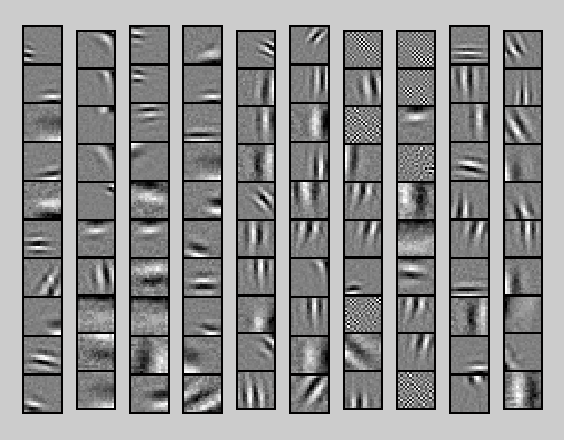

The second paper, "Deep Neural Networks are Easily Fooled" by Anh Nguyen of the University of Wyoming and colleagues, appears to make a bolder proclamation. Citing the work of Szegedy et al., they set out to examine the reverse problem, i.e., how to fabricate a seemingly nonsensical example which, despite its apparent lack of content, nonetheless receives a high-confidence classification. The authors use gradient ascent to generate gibberish images (unrecognizable to the human eye) which are classified strongly into some clearly incorrect object class.
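The idea of gradient ascent on the input can be sketched on a toy model. The example below is an assumption-laden illustration, not the authors' setup: a small linear softmax classifier with random weights stands in for a trained CNN, a 64-value vector stands in for an image, and the function names are hypothetical. Starting from uniform noise, it repeatedly steps the "pixels" in the direction that raises the log-probability of a chosen class, clipping them to the valid range [0, 1].

```python
import math
import random

def class_probs(W, b, x):
    """Softmax probabilities of a linear classifier with weight rows W and biases b."""
    scores = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def ascend_to_class(W, b, x0, target, steps=500, lr=0.01):
    """Gradient ascent on log p(target | x) over the input, pixels clipped to [0, 1]."""
    x = list(x0)
    for _ in range(steps):
        p = class_probs(W, b, x)
        for j in range(len(x)):
            # d/dx_j log p_target = W[target][j] - sum_k p_k * W[k][j]
            grad_j = W[target][j] - sum(pk * row[j] for pk, row in zip(p, W))
            x[j] = min(1.0, max(0.0, x[j] + lr * grad_j))
    return x

# Stand-in "model": random weights, assumed purely for illustration.
rng = random.Random(0)
n_pixels, n_classes, target = 64, 3, 2
W = [[rng.gauss(0.0, 1.0) for _ in range(n_pixels)] for _ in range(n_classes)]
b = [0.0] * n_classes

noise = [rng.random() for _ in range(n_pixels)]  # starting "image": uniform noise
fooling = ascend_to_class(W, b, noise, target)

p_before = class_probs(W, b, noise)[target]
p_after = class_probs(W, b, fooling)[target]
print(f"p(target) before: {p_before:.3f}, after: {p_after:.3f}")
```

Nothing in the procedure asks the result to resemble a real example of the target class. It only asks the model to be confident, which is why the output can remain gibberish to a human.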

From a standpoint of mathematical intuition, this is what we should expect. In the previous case, the altered images were constrained to be indistinguishable from some source image. Here, the images are constrained only to not look like anything! In the whole space of possible images, actual recognizable images are a minuscule subset, leaving nearly the entire vector space open. Further, it is very easy to find a corresponding problem in virtually every other machine learning method. Given any linear classifier, one could find some spot that is both far from the decision boundary and far away from any data point that has ever been seen. Given a topic model, one could create a nonsensical mishmash of randomly ordered words which gets the same inferred topic distribution as some chosen real document.
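The linear classifier case is easy to check by hand. In the sketch below, the two-dimensional classifier and the four "training" points are hypothetical values chosen for illustration. Walking a long way along the weight vector yields a point that sits far from the decision boundary (so the classifier is extremely confident) and also far from everything the model has seen.

```python
import math

def score(w, b, x):
    """Raw linear score w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def distance(a, c):
    """Euclidean distance between two points."""
    return math.sqrt(sum((ai - ci) ** 2 for ai, ci in zip(a, c)))

# Hypothetical classifier and training data.
w, b = [1.0, 1.0], 0.0
data = [[1.0, 2.0], [2.0, 1.0], [-1.0, -2.0], [-2.0, -1.0]]

norm_w = math.sqrt(sum(wi * wi for wi in w))

# Walk 1000 units from the origin along the unit weight vector.
far_point = [1000.0 * wi / norm_w for wi in w]

# Signed distance to the decision boundary, for the new point and for the data.
far_margin = score(w, b, far_point) / norm_w
data_margins = [abs(score(w, b, d)) / norm_w for d in data]

# Distance from the new point to its nearest training example.
nearest = min(distance(far_point, d) for d in data)

print(f"largest margin among training points: {max(data_margins):.2f}")
print(f"margin of fabricated point:           {far_margin:.2f}")
print(f"distance to nearest training point:   {nearest:.2f}")
```

The fabricated point receives a far larger margin than any genuine example, despite resembling none of them.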

The primary sense in which this result might be surprising is that convolutional neural networks have come to rival human abilities when it comes to the task of object detection. In this sense, it may be important to distinguish the capabilities of CNNs from human abilities. The authors make this point from the outset, and the argument is reasonable.

As both Michael I. Jordan and Geoff Hinton have recently discussed, deep learning's great successes have attracted a wave of hype. The recent wave of negative publicity illustrates that this hype cuts both ways. The success of deep learning has rightfully tempted many to examine its shortcomings. However, it's worth keeping in mind that many of the problems are ubiquitous in most machine learning contexts. Perhaps more widespread interest in algorithms robust to adversarial examples could benefit the entire machine learning community.

Zachary Chase Lipton is a PhD student in the Computer Science Engineering department at the University of California, San Diego. Funded by the Division of Biomedical Informatics, he is interested in both theoretical foundations and applications of machine learning. In addition to his work at UCSD, he has interned at Microsoft Research Labs.
