MDL Clustering is a free software suite for unsupervised attribute ranking, discretization, and clustering based on the Minimum Description Length principle and built on the Weka Data Mining platform.

By Zdravko Markov, Central Connecticut State University.

MDL Clustering is a free software suite for unsupervised attribute ranking, discretization, and clustering built on the Weka Data Mining platform. It implements learning algorithms as Java classes compiled in a JAR file, which can be downloaded or run directly online provided that the Java runtime environment is installed. It starts the Weka command-line interface (Simple CLI), which provides access to the new algorithms and to the full functionality of the Weka data mining software as well. The data should be provided in the Weka ARFF or CSV format.

The basic idea of the algorithms is to use of the Minimum Description Length (MDL) principle to compute the encoding length (complexity) of a data split, which is used as an evaluation measure to rank attributes, discretize numeric attributes or cluster data. Below is a brief description of the algorithms. More details, evaluations and comparisons can be found in recent papers and a book chapter [1, 2, 3].

- Unsupervised attribute ranking (MDLranker.class). For each attribute the data are split using its values, i.e. a clustering is created such that each cluster contains the instances that have the same value for that attribute. The MDL of this clustering is used as a measure to evaluate the attribute assuming that the best attribute minimizes the corresponding MDL score.

- Unsupervised discretization (MDLdiscretize.class). The numeric attributes are discretized by splitting the range of their values in two intervals, which are determined by a breakpoint that minimizes the MDL of the resulting data split.

- Hierarchical clustering (MDLcluster.class). The algorithm starts with the data split produced by the attribute that minimizes MDL and recursively applies the same procedure to the resulting splits, thus generating a hierarchical clustering tree similar to a decision tree. The process of growing the tree is controlled by a parameter evaluating the information compression at each node, which is computed as the difference between the code length of the data at the current node and the MDL of the attribute that produces the data split at that node. If the compression becomes lower than a specified cutoff value the process of growing the tree stops and a leaf node is created.

The algorithms computational complexity is linear in the number of data instances and quadratic in the total number of different attribute-values in the data. However it is substantially reduced by an efficient implementation using bit-level parallelism. This makes it suitable not only for processing large number of instances, but also for large number of attributes, which is typical in text and web mining.

In addition to the above algorithms the suite also includes a utility for creating data files from text or web documents and a lab project for web document clustering illustrating the basic steps of document collection, creating the vector space model, data preprocessing, clustering and attribute selection.

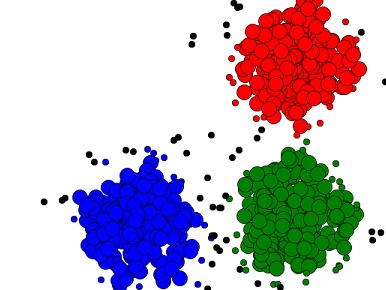

A set of popular benchmark data is provided for experimenting and comparisons. Below are some examples of running the MDL clustering algorithm on a 3-class version of the Reuters data in the Weka command-line interface.

> java MDLcluster data/reuters-3class.arff 280000trade=0 (584469.05) rate=0 (277543.73) [339,18,30] money rate=1 (259602.51) [168,0,177] interesttrade=1 (206999.39) [101,301,12] tradeThe above clusters are explicitly described by attribute values, which makes the clustering models easier to understand and use. The tree has three clusters (exactly as the number of classes) and identifies the two most important attributes in the data. Further, if we are interested in the structure of these clusters we may lower the compression threshold and obtain the following tree, which shows another important attribute (market) that splits the “interest” cluster (rate=1) in two.

> java MDLcluster data/reuters-3class.arff 250000trade=0 (584469.05) rate=0 (277543.73) mln=0 (160192.77) [178,10,28] money mln=1 (129134.46) [161,8,2] money rate=1 (259602.51) market=0 (117196.81) [66,0,117] interest market=1 (107679.77) [102,0,60] moneytrade=1 (206999.39) [101,301,12] tradeThe clustering trees produced by MDLcluster in an unsupervised manner are very similar to the decision trees created by supervised learning algorithms. For example, the following tree is produced by the Weka’s J48 algorithm.

> java weka.classifiers.trees.J48 a€“t data/reuters-3class.arff -M 150trade = 0| rate = 0: money (387.0/48.0)| rate = 1| | market = 0: interest (183.0/66.0)| | market = 1: money (162.0/60.0)trade = 1: trade (414.0/113.0)This similarity indicates that the MDL evaluation measure can identify the important attributes in the data without using class information. A more detailed study [1] shows that the MDL unsupervised attribute ranking performs comparably with the supervised ranking based on information gain (used by the decision tree learning algorithm). Another empirical study [2] show that the MDL clustering algorithm compares favorably with k-means and EM on popular benchmark data and performs particularly well on binary and sparse data (e.g. text and web documents).

References

- Zdravko Markov. MDL-based Unsupervised Attribute Ranking, Proceedings of the 26th International Florida Artificial Intelligence Research Society Conference (FLAIRS-26), St. Pete Beach, Florida, USA, May 22-24, AAAI Press 2013, pp. 444-449.http://www.aaai.org/ocs/index.php/FLAIRS/FLAIRS13/paper/view/5845/6115

- Zdravko Markov. MDL-Based Hierarchical Clustering, Proceedings of the IEEE 14th International Conference on Machine Learning and Applications (ICMLA 2015), December 9-11, 2015, Miami, Florida, USA, pp. 464-467. PDF

- Zdravko Markov and Daniel T. Larose. MDL-Based Model and Feature Evaluation, in Chapter 4 of Data Mining the Web: Uncovering Patterns in Web Content, Structure, and Usage, Wiley, April 2007, ISBN: 978-0-471-66655-4.

Bio: Zdravko Markov is a professor of Computer Science at Central Connecticut State University, where he teaches courses in Programming, Computer Architecture, Artificial Intelligence, Machine Learning, Data and Web Mining. His research area is Artificial Intelligence with a focus on Machine Learning, Data and Web Mining, where he is developing software and a project-based frameworks for teaching core AI topics. Dr. Markov has published 4 books and more than 60 research papers in conference proceedings and journals. His most recent book (co-authored with Daniel Larose) is "Data Mining The Web: Uncovering Patterns in Web Content, Structure, and Usage", published by Wiley in 2007.

Bio: Zdravko Markov is a professor of Computer Science at Central Connecticut State University, where he teaches courses in Programming, Computer Architecture, Artificial Intelligence, Machine Learning, Data and Web Mining. His research area is Artificial Intelligence with a focus on Machine Learning, Data and Web Mining, where he is developing software and a project-based frameworks for teaching core AI topics. Dr. Markov has published 4 books and more than 60 research papers in conference proceedings and journals. His most recent book (co-authored with Daniel Larose) is "Data Mining The Web: Uncovering Patterns in Web Content, Structure, and Usage", published by Wiley in 2007.

雷达卡

雷达卡

提升卡

提升卡 置顶卡

置顶卡 沉默卡

沉默卡 变色卡

变色卡 抢沙发

抢沙发 千斤顶

千斤顶 显身卡

显身卡

京公网安备 11010802022788号

京公网安备 11010802022788号