@JennyBryan posted her slides from the 2015 R Summit, and they are a must-read for instructors and for stats/R folk in general. She’s one of the foremost experts on R + GitHub, and her personal and class workflows provide solid patterns worth emulating.

One thing she has mentioned a few times (and included in her R Summit talk) is the idea that you can lean on GitHub when the official examples for a function are “kind of thin”. She uses `vapply` as an example, showing how to search for uses of `vapply` in CRAN (there’s a read-only CRAN mirror on GitHub) and in GitHub R code in general.
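The search she describes boils down to a URL carrying a couple of qualifiers. Here is a minimal sketch of building one, assuming the 2015-era GitHub search syntax that `ghelp()` also relies on (`extension:R` to restrict to R files, `user:cran` to restrict to the CRAN mirror). `gh_search_url()` is a hypothetical helper name, not part of any package. Base R’s `URLencode()` encodes spaces as `%20` rather than the `+` used in the gist; both are standard encodings of a space in a query string.

```r
# Hypothetical helper: build a GitHub code-search URL for an R identifier.
# Assumes GitHub's search qualifiers "extension:R" and "user:cran".
gh_search_url <- function(topic, in_cran = TRUE) {
  query <- paste0(topic, " extension:R")
  if (in_cran) query <- paste0(query, " user:cran")
  # reserved = TRUE percent-encodes ':' and spaces as well
  paste0("https://github.com/search?q=", URLencode(query, reserved = TRUE))
}

gh_search_url("vapply")
gh_search_url("vapply", in_cran = FALSE)
```

Pasting either URL into a browser should reproduce the kind of search shown in the slides.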

I remember throwing together a small function to kick up a browser from R for those URLs (in response to one of her tweets), but this morning (after reading her slides last night) I realized that it’s possible to get these GitHub search results without leaving RStudio (or, at least, the first page of results). So, I threw together this gist which, when sourced, provides a `ghelp()` function. This is the code:

```r
ghelp <- function(topic, in_cran = TRUE) {

  require(htmltools) # for getting HTML to the viewer
  require(rvest)     # for scraping & munging HTML

  # github search URL base ("%%" becomes a literal "%" after sprintf())
  base_ext_url <- "https://github.com/search?utf8=%%E2%%9C%%93&q=%s+extension%%3AR"
  ext_url <- sprintf(base_ext_url, URLencode(topic, reserved = TRUE))

  # if searching with user:cran (the default) add that to the URL
  if (in_cran) ext_url <- paste0(ext_url, "+user%3Acran")

  # at the time of writing, "rvest" and "xml2" are undergoing some changes, so
  # accommodate those of us who are on the bleeding edge of the hadleyverse;
  # either way, we are just extracting out the results <div> for viewing in
  # the viewer pane (it works in plain ol' R, too)
  if (packageVersion("rvest") < "0.2.0.9000") {
    require(XML)
    pg <- html(ext_url)
    res_div <- paste(capture.output(html_node(pg, "div#code_search_results")), collapse = "")
  } else {
    require(xml2)
    pg <- read_html(ext_url)
    # collapse to a single string so sprintf() below builds exactly one page
    res_div <- paste(as.character(html_nodes(pg, "div#code_search_results")), collapse = "")
  }

  # clean up the HTML a bit (fixed = TRUE: match the "?" literally)
  res_div <- gsub('How are these search results? <a href="/contact">Tell us!</a>', '', res_div, fixed = TRUE)

  # make GitHub's site-relative links absolute so they open in a browser
  res_div <- gsub('href="/', 'href="https://github.com/', res_div, fixed = TRUE)

  # build the viewer page, getting CSS from github-proper and hiding some cruft;
  # the "Show on GitHub" link at the top points at the full results page
  for_view <- sprintf('<html><head><link crossorigin="anonymous" href="https://assets-cdn.github.com/assets/github/index-4157068649cead58a7dd42dc9c0f2dc5b01bcc77921bc077b357e48be23aa237.css" media="all" rel="stylesheet" /><style>body{padding:20px}</style></head><body><a href="%s">Show on GitHub</a><hr noshade size=1/>%s</body></html>', ext_url, res_div)

  # this makes it show in the viewer (or browser if you're using plain R)
  html_print(HTML(for_view))
}
```
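The `href` rewrite in `ghelp()` turns every `href="/` into an absolute GitHub link, which also catches protocol-relative links such as `href="//cdn.example.com/..."`. A slightly more careful variant, sketched here as a hypothetical `absolutize_links()` helper (not part of the gist), uses a Perl lookahead so that only single-slash, site-relative links are rewritten:

```r
# Hypothetical helper (not from the original gist): make site-relative links
# absolute so they resolve against GitHub when shown in the RStudio viewer.
absolutize_links <- function(html, base = "https://github.com") {
  # 'href="/(?!/)' matches href="/ only when it is NOT followed by a second
  # slash, so protocol-relative URLs (href="//host/...") are left untouched
  gsub('href="/(?!/)', paste0('href="', base, '/'), html, perl = TRUE)
}

frag <- paste('<a href="/cran/pkg/blob/master/R/x.R">x.R</a>',
              '<link href="//cdn.example.com/site.css">')
absolutize_links(frag)
```

The first link gains the `https://github.com` prefix while the protocol-relative stylesheet link passes through unchanged.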

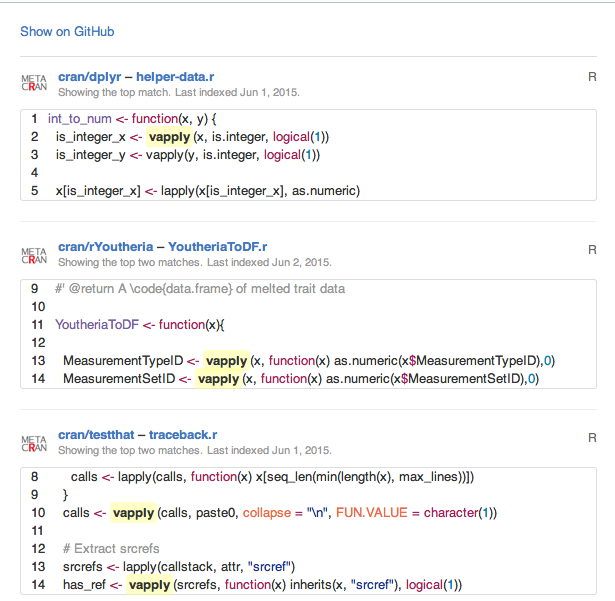

Now, when you type ghelp("vapply"), you’ll get:

in the viewer pane (and similar with ghelp("vapply", in_cran=FALSE)). Clicking the top link will take you to the search results page on GitHub (in your default web browser), and all the other links will pop out to a browser as well.

If you’re the trusting type, you can `devtools::source_gist('32e9c140129d7d51db52')`, or just add this to your R startup file (e.g. `.Rprofile`) or to your personal helper package.

There’s definitely room for some CSS hacking, and it would be fairly straightforward to get all the search results into the viewer by following the pagination links and stitching them together (an exercise left to the reader).
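For that pagination exercise, here is one possible shape, under two loudly stated assumptions: that GitHub’s search pages take a `p=` page-number parameter, and that you supply a `fetch_div` function (hypothetical) that downloads one page and returns its results `<div>` as a single string, for instance by reusing the `read_html()`/`html_nodes()` lines from `ghelp()`. Keeping the fetching behind a function argument lets the stitching logic be tried without touching the network.

```r
# Sketch only: stitch several pages of search results into one HTML fragment.
# Assumptions: GitHub paginates search results via a "p=" query parameter;
# fetch_div is a caller-supplied function(url) returning one HTML string.
stitch_result_pages <- function(search_url, n_pages, fetch_div) {
  stopifnot(n_pages >= 1)
  page_urls <- paste0(search_url, "&p=", seq_len(n_pages))
  # vapply() errors if any page's fetcher does not return exactly one string
  divs <- vapply(page_urls, fetch_div, character(1), USE.NAMES = FALSE)
  paste(divs, collapse = "\n<hr/>\n")
}

# Offline usage with a stub fetcher that just echoes the page URL
fake_fetch <- function(url) paste0("<div>", url, "</div>")
stitch_result_pages("https://github.com/search?q=vapply", 2, fake_fetch)
```

The stitched string could then be dropped into the `%s` slot of the viewer page that `ghelp()` builds, in place of the single-page `res_div`.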

To leave a comment for the author, please follow the link and comment on his blog: rud.is » R.
