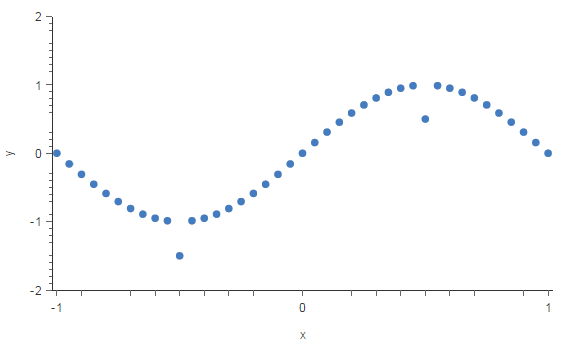

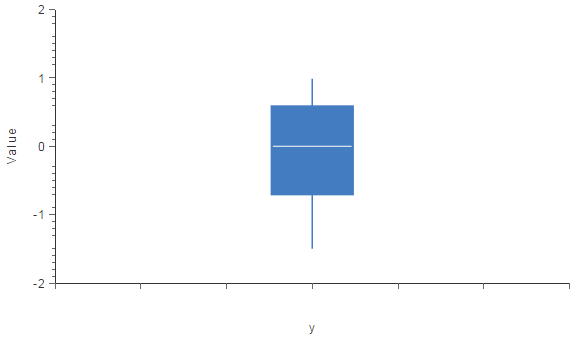

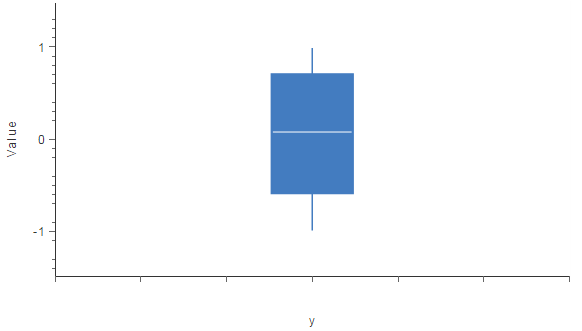

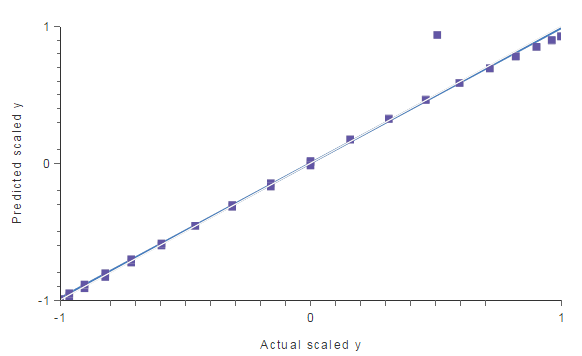

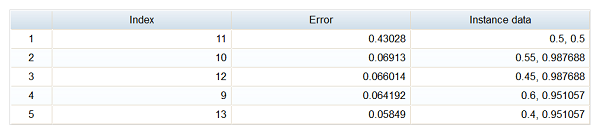

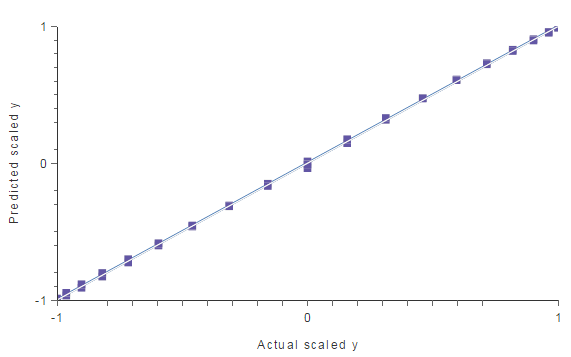

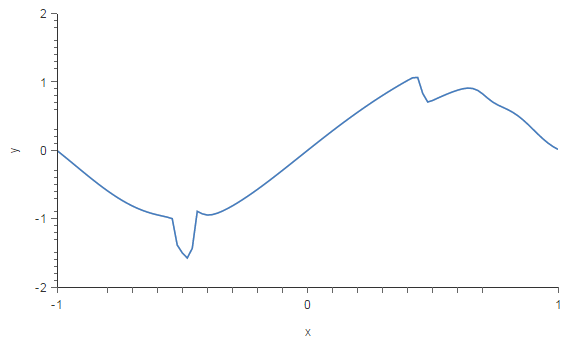

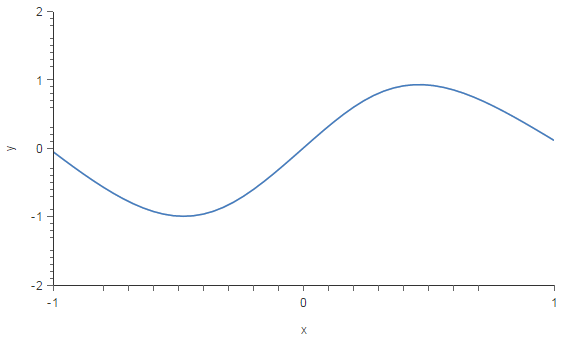

An outlier is a data point that is distant from other similar points. Outliers may be due to variability in the measurement or may indicate experimental errors. If possible, outliers should be excluded from the data set. However, detecting those anomalous instances can be very difficult, and is not always possible.

Machine learning algorithms are very sensitive to the range and distribution of attribute values. Outliers can spoil and mislead the training process, resulting in longer training times, less accurate models and ultimately poorer results.

In this article, we are going to talk about 3 different methods of dealing with outliers: the univariate method, the multivariate method and the Minkowski error.

To illustrate these methods, we will use a data set obtained from the following function.

y = sin(π·x)

Once we have our data set, we replace two y values with others that are far from our function. The next graph depicts this data set.

The points A=(-0.5,-1.5) and B=(0.5,0.5) are outliers. Point A is outside the range defined by the y data, while Point B is inside that range. As we will see, that makes them different in nature, and we will need different methods to detect and treat them.

One of the simplest methods for detecting outliers is the use of box plots. A box plot is a graphical display for describing the distribution of the data. Box plots use the median and the lower and upper quartiles.

Tukey’s method defines an outlier as a value of the data set that falls far from the central point, the median. The maximum distance to the center of the data that is going to be allowed is called the cleaning parameter. If the cleaning parameter is very large, the test becomes less sensitive to outliers. On the contrary, if it is too small, a lot of values will be detected as outliers.

The following chart shows the box plot for the variable y. The minimum of the variable is -1.5, the first quartile is -0.707, the second quartile or median is 0, the third quartile is 0.588 and the maximum is 0.988.
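The box plot statistics and Tukey’s test can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the article does not give the x grid, so the sketch assumes 41 evenly spaced points in [-1, 1], and it reads the cleaning parameter as the multiplier of the interquartile range in the usual Tukey fences (the article only describes it as a maximum allowed distance to the center). The function name `tukey_outliers` is hypothetical, and 0.6 is the cleaning parameter the article applies to this data set.

```python
import math
import statistics

# Assumed grid: 41 evenly spaced x values in [-1, 1] (not stated in the article).
xs = [k / 20 - 1 for k in range(41)]
ys = [math.sin(math.pi * x) for x in xs]

# Replace two y values with the outliers named in the article.
ys[xs.index(-0.5)] = -1.5  # Point A, outside the range of the clean data
ys[xs.index(0.5)] = 0.5    # Point B, inside the range of the clean data

# Five-number summary; "inclusive" interpolates linearly between data points.
q1, median, q3 = statistics.quantiles(ys, n=4, method="inclusive")
summary = (min(ys), q1, median, q3, max(ys))


def tukey_outliers(points, q1, q3, cleaning_parameter):
    """Return the (x, y) points whose y lies outside the Tukey fences.

    Assumption: the fences are q1 - k * IQR and q3 + k * IQR, with the
    cleaning parameter playing the role of k.
    """
    iqr = q3 - q1
    lower = q1 - cleaning_parameter * iqr
    upper = q3 + cleaning_parameter * iqr
    return [(x, y) for x, y in points if y < lower or y > upper]


outliers = tukey_outliers(zip(xs, ys), q1, q3, cleaning_parameter=0.6)
print("summary:", [round(v, 3) for v in summary])
print("flagged:", outliers)
```

Under these assumptions the five-number summary agrees with the values quoted above to three decimals, and only Point A falls outside the fences. Point B stays inside them, which is why a test on y alone cannot catch it.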
As we can see, the minimum is far away from the first quartile and the median. If we set the cleaning parameter to 0.6, Tukey’s method will detect Point A as an outlier and clean it from the data set.

Plotting the box plot for that variable again, we can see that the outlier has been removed. As a consequence, the distribution of the data is now much better. Now, the minimum of y is -0.9858, the first quartile is -0.588, the second quartile or median is 0.078, the third quartile is 0.707 and the maximum is 0.988.

However, this univariate method has not detected Point B, and therefore we are not finished.

Outliers do not need to be extreme values. Therefore, as we have seen with Point B, the univariate method does not always work well. The multivariate method tries to solve that by building a model using all the data available, and then cleaning those instances with errors above a given value.

In this case, we have trained a neural network using all the available data (except Point A, which was excluded by the univariate method). Once we have our predictive model, we perform a linear regression analysis in order to obtain the next graph. The predicted values are plotted versus the actual ones as squares. The coloured line indicates the best linear fit. The grey line would indicate a perfect fit.

As we can see, there is a point that falls too far from the model. This point is spoiling the model, so we can think that it is another outlier.

To find that point quantitatively, we can calculate the maximum errors between the outputs from the model and the targets in the data. The following table lists the 5 instances with maximum errors.

We can notice that instance 11 stands out for having a large error in comparison with the others (0.430 versus 0.069,…). If we look at the linear regression graph, we can see that this instance matches the point that is far away from the model.
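The idea of ranking instances by model error can be sketched without a neural network. The example below is a stand-in, not the article's model: it swaps the neural network for a degree-5 polynomial fitted by least squares, assumes the same 41-point grid on [-1, 1] with Point A already removed, and uses a hypothetical absolute error threshold of 0.2 because the article does not define its 20% maximum-error setting precisely. The helper names `fit_polynomial` and `predict` are illustrative.

```python
import math

# Assumed data: 41-point grid on [-1, 1] with Point A (x = -0.5, k = 10)
# already removed and Point B (x = 0.5) still holding the corrupted value.
xs = [k / 20 - 1 for k in range(41) if k != 10]
ys = [0.5 if x == 0.5 else math.sin(math.pi * x) for x in xs]


def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (pure Python)."""
    n = degree + 1
    # Normal equations (V^T V) c = V^T y, where V[i][j] = xs[i] ** j.
    a = [[sum(x ** (r + c) for x in xs) for c in range(n)] for r in range(n)]
    b = [sum(y * x ** r for x, y in zip(xs, ys)) for r in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    # Back substitution; coeffs[k] multiplies x ** k.
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(a[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = (b[r] - s) / a[r][r]
    return coeffs


def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


# Stand-in model (a polynomial, not the article's neural network).
coeffs = fit_polynomial(xs, ys, degree=5)
errors = [abs(predict(coeffs, x) - y) for x, y in zip(xs, ys)]

# Rank instances by absolute error, largest first, and keep the five worst.
ranked = sorted(zip(errors, xs), reverse=True)
top_five = ranked[:5]

max_error = 0.2  # hypothetical threshold, not the article's exact setting
flagged = [x for err, x in ranked if err > max_error]

for err, x in top_five:
    print(f"x = {x:+.2f}  error = {err:.3f}")
print("flagged x values:", flagged)
```

Because the model is fitted to all the remaining data, the corrupted instance pulls the curve only slightly while keeping a large error of its own, so it separates clearly from the rest of the ranking.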
If we select 20% of maximum error, this method identifies Point B as an outlier and cleans it from the data set. We can see that by performing a linear regression analysis again.

There are no more outliers in our data set, so the generalization capabilities of our model will improve notably.

Now, we are going to talk about a different method for dealing with outliers. Unlike the univariate and multivariate methods, it doesn’t detect and clean the outliers. Instead, it reduces the impact that outliers will have on the model.

The Minkowski error is a loss index that is less sensitive to outliers than the standard sum squared error. The sum squared error squares each instance error, so outliers make too large a contribution to the total error. The Minkowski error solves that by raising each instance error to a number smaller than 2, for instance 1.5. This reduces the contribution of outliers to the total error. For instance, if an outlier has an error of 10, the squared error for that instance will be 100, while the Minkowski error will be 31.62.

To illustrate this method, we are going to build two different neural network models from our data set containing two outliers (A and B). The architecture selected for this network is 1:24:1. The first one will be created with the sum squared error, and the second one with the Minkowski error.

The model trained with sum squared error is plotted in the next figure. As we can see, two outliers are spoiling the model.

Now, we are going to train the same neural network with the Minkowski error. The resulting model is depicted next. As we can see, the Minkowski error has made the training process less sensitive to outliers than the sum squared error. As a result, the Minkowski error has improved the quality of our model notably.

We have seen that outliers are one of the main problems when building a predictive model. Indeed, they cause data scientists to achieve poorer results than they could.
To solve that, we need effective methods to deal with those spurious points and remove them. In this article, we have seen 3 different methods for dealing with outliers: the univariate method, the multivariate method and the Minkowski error. These methods are complementary and, if our data set has many difficult outliers, we might need to try them all.
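As a small check on the Minkowski error arithmetic discussed in the article, the sketch below compares how much a single instance contributes to each loss. It assumes the simplest unnormalized forms, a sum of squared errors and a sum of absolute errors raised to the power 1.5; a real training library may normalize these differently. The function names are illustrative.

```python
def sum_squared_error(errors):
    """Sum of squared instance errors."""
    return sum(e ** 2 for e in errors)


def minkowski_error(errors, p=1.5):
    """Sum of absolute instance errors raised to p (assumed form, p < 2)."""
    return sum(abs(e) ** p for e in errors)


# Contribution of one instance to each loss, for a typical error of 1
# and an outlier error of 10.
typical, outlier = 1.0, 10.0
sse_ratio = sum_squared_error([outlier]) / sum_squared_error([typical])
mink_ratio = minkowski_error([outlier]) / minkowski_error([typical])

print("squared contribution of the outlier:", sum_squared_error([outlier]))
print("Minkowski contribution of the outlier:", round(minkowski_error([outlier]), 2))
print("outlier weight relative to a typical instance:", sse_ratio, "vs", round(mink_ratio, 2))
```

With the squared error the outlier weighs 100 times as much as an instance with error 1, while with exponent 1.5 it weighs about 31.62 times as much, which is the reduction the article describes.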
