Client1--HDFS Operations

Prerequisite: Ensure that Hadoop is installed and the environment is configured. If it's a distributed cluster, make sure passwordless SSH login between nodes is set up. All operations should be performed with a user who has Hadoop operation permissions.

1. Cluster Management

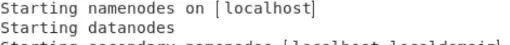

To start the HDFS daemons across the cluster, execute the following command:

$HADOOP_HOME/sbin/start-dfs.sh

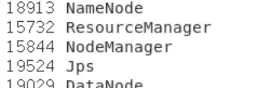

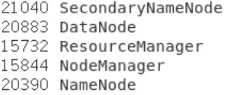

To verify if the HDFS processes have started successfully, use the command:

jps

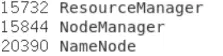

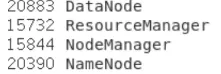

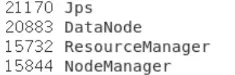

To shut down the HDFS cluster, run the following command, then run jps again to confirm that the HDFS processes are gone:

$HADOOP_HOME/sbin/stop-dfs.sh
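The jps check in this section can also be scripted. The following is a minimal sketch, not part of the original walkthrough: the function name check_hdfs_daemons is hypothetical, and the daemon list assumes a single-node setup where NameNode, DataNode, and SecondaryNameNode all run on the same machine.

```shell
# Report which of the expected HDFS daemons appear in the jps listing.
# Assumption: all three daemons run on this machine (single-node setup);
# adjust the list for other layouts.
check_hdfs_daemons() {
    running=$(jps)
    missing=0
    for daemon in NameNode DataNode SecondaryNameNode; do
        # jps prints "<pid> <main class>"; compare the class name exactly
        # so that SecondaryNameNode is not mistaken for NameNode.
        if printf '%s\n' "$running" | awk '{print $2}' | grep -qx "$daemon"; then
            echo "$daemon: running"
        else
            echo "$daemon: not running"
            missing=1
        fi
    done
    # Exit status 0 only when every expected daemon was found
    return "$missing"
}
```

After start-dfs.sh, calling check_hdfs_daemons should return 0; after stop-dfs.sh it should list every daemon as not running and return 1.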

2. Controlling Individual Processes

For managing individual services within the Hadoop cluster, you can use the following commands:

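- To start the NameNode:

$HADOOP_HOME/sbin/hadoop-daemon.sh start namenode

- To check the status of the DataNode:

$HADOOP_HOME/sbin/hadoop-daemon.sh status datanode

- To stop the SecondaryNameNode:

$HADOOP_HOME/sbin/hadoop-daemon.sh stop secondarynamenode

In Hadoop 3.x, hadoop-daemon.sh is deprecated in favor of hdfs --daemon. The equivalent commands are:

hdfs --daemon start namenode

hdfs --daemon status datanode

hdfs --daemon stop secondarynamenode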
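Because the two command styles above do the same job, a small wrapper can choose between them. This is a sketch under stated assumptions: the function name hdfs_daemon is hypothetical, and it assumes the first line of hadoop version has the form "Hadoop 3.3.6".

```shell
# Start, stop, or query one HDFS daemon, picking the command style
# from the installed Hadoop major version.
# Usage: hdfs_daemon <start|stop|status> <namenode|datanode|secondarynamenode>
hdfs_daemon() {
    action=$1
    service=$2
    # Assumption: "hadoop version" prints e.g. "Hadoop 3.3.6" on line 1
    major=$(hadoop version | head -n 1 | awk '{print $2}' | cut -d. -f1)
    if [ "$major" -ge 3 ]; then
        hdfs --daemon "$action" "$service"
    else
        "$HADOOP_HOME/sbin/hadoop-daemon.sh" "$action" "$service"
    fi
}
```

For example, hdfs_daemon start namenode runs hdfs --daemon start namenode on a Hadoop 3.x installation.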

3. Core File System Operations

Create directories in HDFS as follows:

- Create a single directory (without -p, the command fails if the parent directory does not exist):

hadoop fs -mkdir /test_dir

- Create nested directories, including any missing parents (-p):

hadoop fs -mkdir -p /hdfs_demo/input

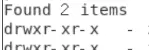

To verify the creation of directories, use:

hadoop fs -ls /
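When directory creation is scripted, it can help to check for the directory first and report what happened. The sketch below uses hadoop fs -test -d for that check; the function name ensure_hdfs_dir is hypothetical and not part of the original walkthrough.

```shell
# Create an HDFS directory only if it is not already there,
# and say which of the two cases applied.
ensure_hdfs_dir() {
    dir=$1
    # "hadoop fs -test -d" exits 0 when the path exists and is a directory
    if hadoop fs -test -d "$dir"; then
        echo "exists: $dir"
    else
        hadoop fs -mkdir -p "$dir" && echo "created: $dir"
    fi
}
```

Calling ensure_hdfs_dir /hdfs_demo/input twice should print "created" the first time and "exists" the second.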

4. Uploading Local Files to HDFS

First, create a test file on your local system:

echo "Hello HDFS" > local_test.txt

Then, upload the local file to the HDFS directory /hdfs_demo/input:

hadoop fs -put local_test.txt /hdfs_demo/input/

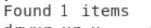

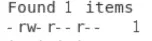

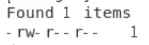

To verify the upload, list the files in the directory:

hadoop fs -ls /hdfs_demo/input
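The upload and its verification can be combined into one step. The following sketch is an illustration only: the function name upload_to_hdfs is hypothetical, and the use of -put -f (overwrite an existing target) is a design choice that goes beyond the plain -put shown above.

```shell
# Upload a local file and confirm it landed in the target HDFS directory.
# Usage: upload_to_hdfs <local file> <HDFS directory>
upload_to_hdfs() {
    src=$1
    dest_dir=$2
    if [ ! -f "$src" ]; then
        echo "no such local file: $src" >&2
        return 1
    fi
    # -f overwrites an existing file of the same name instead of failing
    hadoop fs -put -f "$src" "$dest_dir/" || return 1
    # -test -e exits 0 when the path exists
    hadoop fs -test -e "$dest_dir/$(basename "$src")"
}
```

For example, upload_to_hdfs local_test.txt /hdfs_demo/input returns 0 only if the file is present in HDFS afterwards.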

5. Viewing Content of HDFS Files

To view the content of a file stored in HDFS:

hadoop fs -cat /hdfs_demo/input/local_test.txt

6. Appending Content to HDFS Files

Create a file with the content to append:

echo "Append this line" > append_content.txt

Append the content to an existing HDFS file:

hadoop fs -appendToFile append_content.txt /hdfs_demo/input/local_test.txt

To verify the appended content, use:

hadoop fs -cat /hdfs_demo/input/local_test.txt

7. Copying Files Within HDFS

To copy a file within HDFS, use the following commands:

- Copy a file without changing its name:

hadoop fs -cp /hdfs_demo/input/local_test.txt /test_dir/

- Copy a file and give the copy a new name:

hadoop fs -cp /hdfs_demo/input/local_test.txt /test_dir/copied_file.txt

To verify the operation, list the contents of the target directory:

hadoop fs -ls /test_dir

8. Moving Files in HDFS

To move files within HDFS, use the following commands:

- Move a file to a different directory without changing its name:

hadoop fs -mv /test_dir/copied_file.txt /hdfs_demo/

- Rename a file within the same directory:

hadoop fs -mv /hdfs_demo/input/local_test.txt /hdfs_demo/input/renamed_test.txt

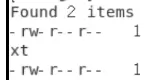

To verify the move and rename operations, list the contents of the directories:

hadoop fs -ls /hdfs_demo

hadoop fs -ls /hdfs_demo/input

9. Downloading Files from HDFS to Local

To download a file from HDFS to your local system, use the following command:

hadoop fs -get /hdfs_demo/input/renamed_test.txt ./

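To download the file and rename it locally, pass the new local file name (here downloaded_file.txt) as the destination:

hadoop fs -get /hdfs_demo/input/renamed_test.txt ./downloaded_file.txt

To verify, check that both local files exist and view the downloaded content:

ls -l ./renamed_test.txt ./downloaded_file.txt

cat ./downloaded_file.txt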
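Beyond checking that the local file exists, the downloaded copy can be compared with the HDFS original. This is a minimal sketch, assuming the file is small enough to stream through hadoop fs -cat; the function name hdfs_matches_local is hypothetical.

```shell
# Compare an HDFS file with a local file by checksum.
# Usage: hdfs_matches_local <HDFS path> <local path>
hdfs_matches_local() {
    hdfs_path=$1
    local_path=$2
    # Both sides are read from stdin, so cksum prints only "<crc> <size>"
    remote_sum=$(hadoop fs -cat "$hdfs_path" | cksum)
    local_sum=$(cksum < "$local_path")
    # Exit status 0 when the contents are identical
    [ "$remote_sum" = "$local_sum" ]
}
```

For example, hdfs_matches_local /hdfs_demo/input/renamed_test.txt ./downloaded_file.txt returns 0 when the download is intact.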

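10. Removing Files or Directories from HDFS

To delete files or directories from HDFS, use the following commands:

- Delete a single file:

hadoop fs -rm /hdfs_demo/copied_file.txt

- Delete an empty directory (fails if the directory is not empty, so /test_dir must contain no files; in this walkthrough it still holds the local_test.txt copied in step 7, which has to be removed first):

hadoop fs -rmdir /test_dir

- Force-delete a non-empty directory and all of its contents (-r deletes recursively; -f suppresses the error if the path does not exist):

hadoop fs -rm -r -f /hdfs_demo/input

To verify, list the root directory and confirm that the files or directories are gone:

hadoop fs -ls /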
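Recursive deletion is easy to misdirect, so a script may want a guard in front of it. The sketch below is one possible guard, not part of the original walkthrough; the function name safe_hdfs_rm is hypothetical, and it only rejects the root, empty, and relative paths.

```shell
# Recursively delete an HDFS path, refusing obviously dangerous targets.
# Usage: safe_hdfs_rm <absolute HDFS path>
safe_hdfs_rm() {
    target=$1
    # A path that is empty or made only of slashes would mean the HDFS root
    if [ -z "$(printf '%s' "$target" | tr -d '/')" ]; then
        echo "refusing to delete root or empty path" >&2
        return 1
    fi
    case "$target" in
        /*) ;;  # absolute path: accepted
        *) echo "expected an absolute HDFS path: $target" >&2; return 1 ;;
    esac
    hadoop fs -rm -r "$target"
}
```

For example, safe_hdfs_rm /hdfs_demo/input deletes the directory, while safe_hdfs_rm / returns 1 without calling hadoop.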

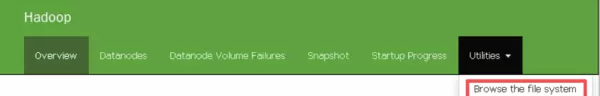

Client2--Accessing the HDFS Web UI (Port 9870)

- 访问Web用户界面:对于Hadoop 3.x及以上版本,可以在浏览器中输入相应地址。

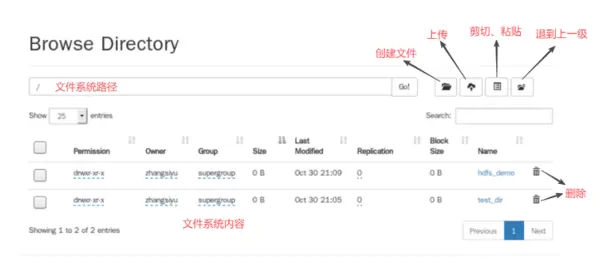

- 页面操作:登录Web界面后,通过顶部菜单导航至文件管理区域。

在文件浏览页面,您可以执行以下操作:

- 创建文件夹:点击“新建文件夹”图标,输入名称即可。

- 上传文件:点击“上传文件”图标,选择本地文件上传。

- 删除文件/目录:选择要删除的目标文件或目录,点击“删除”按钮。

- 返回上一级目录:点击“返回”图标。

http://<namenode-ip>:9870http://192.168.142.131:9870UtilitiesBrowse the file system
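The same port also serves the WebHDFS REST API, which allows the listing shown in the file browser to be fetched from a script. This is a hedged sketch: the function name webhdfs_url is hypothetical, and it assumes WebHDFS is enabled on the NameNode and that the cluster does not require authentication.

```shell
# Build a WebHDFS URL for a NameNode listening on port 9870.
# Usage: webhdfs_url <namenode host> <absolute HDFS path> <operation>
webhdfs_url() {
    host=$1
    path=$2
    op=$3
    # WebHDFS paths have the form /webhdfs/v1/<path>?op=<OPERATION>
    printf 'http://%s:9870/webhdfs/v1%s?op=%s\n' "$host" "$path" "$op"
}

# Example call (commented out because it needs a reachable cluster):
# curl -s "$(webhdfs_url 192.168.142.131 /hdfs_demo LISTSTATUS)"
```

LISTSTATUS returns a JSON description of the directory contents, comparable to hadoop fs -ls.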

Client3--Java Operations

- 解压Hadoop:下载Hadoop安装包,将其解压到本地指定路径。如果解压过程中需要管理员权限,请按住相关键并右键选择“以管理员身份解压”。

- 配置系统环境变量:创建一个系统变量,其值指向Hadoop安装目录。同时,在现有系统变量中添加Hadoop的bin路径。

- 下载并放置依赖文件:获取必要的依赖文件,并将它们放置在正确的位置。

- 安装Big Data Tools插件:在IDEA中依次点击“File”->“Settings”->“Plugins”,搜索“Big Data Tools”并安装,之后重启IDEA。

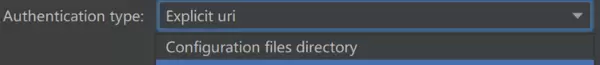

- 配置HDFS连接:可以通过配置文件或直接在代码中指定URI来设置HDFS连接。

hadoop-3.3.6.tar.gzG:\Edge\hadoop-3.3.6ShiftHADOOP_HOMEG:\Edge\hadoop-3.3.6;Path%HADOOP_HOME%\binhadoop.dllwinutils.exe。2.IDEA插件安装与配置

Configuration files directory或者Explicit uri来连接
