A reasoned discussion of why the next generation of data-efficient learning approaches relies on our developing new algorithms that can propagate stochasticity or uncertainty right through the model, and which are mathematically more involved than the standard approaches.

By Neil Lawrence, University of Sheffield.

It seems it may only be a matter of time before the best Go player on the planet is a computer 1. AlphaGo beat the European champion in Go and was driven by machine learning, a technology that has underpinned the recent major advances in artificial intelligence in computer vision, speech recognition and language translation.

(Editor: this post was written in March 2016, shortly before AlphaGo beat the world's top Go player, Lee Se-dol.)

Machine learning is a data-driven approach to artificial intelligence. AlphaGo learnt how to play Go by playing many games against itself, and by observing a large history of games played by professional players.

The end result is that, by the time of its first match against the European Champion, AlphaGo had already played many more games of Go than any human could possibly play in a lifetime. And since that win AlphaGo has been actively learning to improve itself, relentlessly playing all day and all night in an effort to ready itself to play the world champion.

Our Data Delusion

This phenomenon isn’t restricted to AlphaGo. Our human-level performance in other domains is also driven by an unearthly amount of data. Our vision systems see way more labeled images than we require to recognize objects, and our speech systems require many more words than we do to understand words. Our translation systems require many more examples of translated text than a human could read.

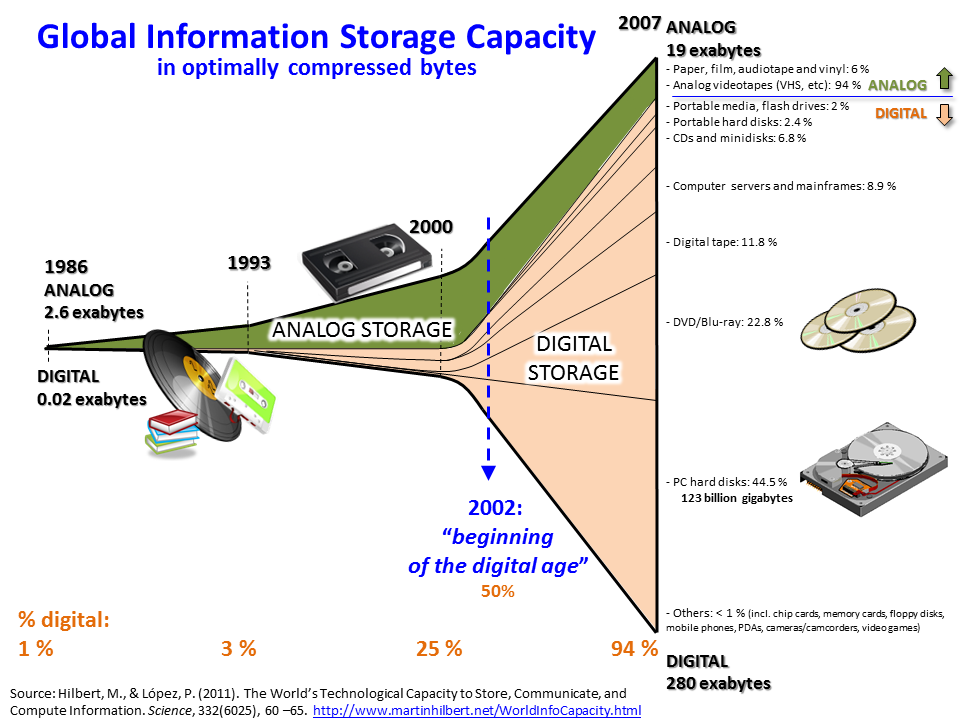

So while we are making considerable progress on tasks which were once thought extremely difficult or impossible, the truth is that the progress is driven far more by the availability of data than by any improvement in algorithms. Indeed, when we first tried to address tasks such as object recognition and language translation they would have been impossible if we had tried to apply state of the art methodologies to the data we had then. It is the explosion of data that has rendered them tractable.

Steam

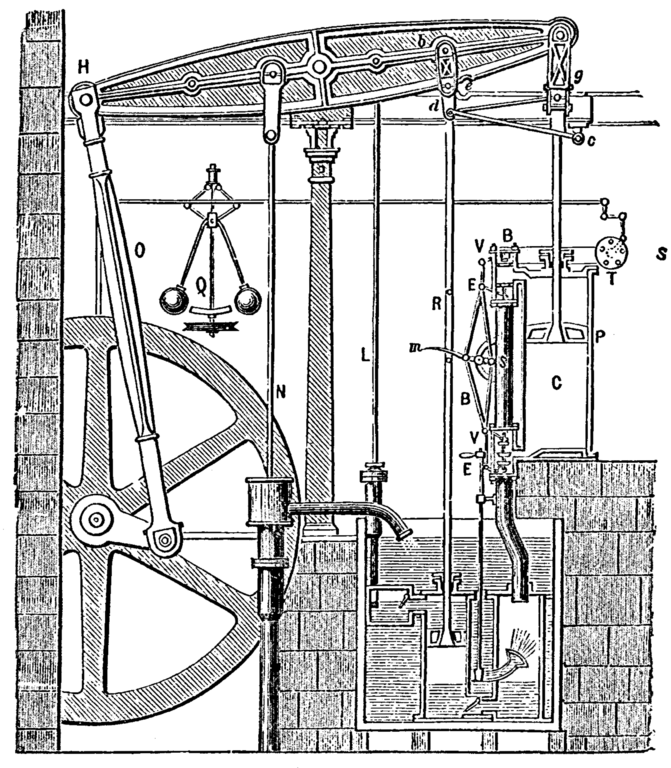

The situation reminds me greatly of the early stage of the industrial revolution. Thomas Newcomen was born in the South West of England, an area well known, since Roman times, for tin mining. Mines need pumping out to keep water at bay, and in 1712 Newcomen invented the steam engine for doing that. His engine consisted of a large piston sitting on top of a boiler. The piston was alternately filled with steam by the boiler, and then cooled through direct injection of water, causing motion.

As it happened, Newcomen’s engines had relatively little effect at his local tin mines. They were so inefficient that they were impractical there. They were widely used, but in coalfields, where they could be easily fueled.

This puts me in mind of the situation today: the major internet companies can profit from our current generation of inference engines because they are equivalent to the coalfields of yesteryear. They have enormous quantities of data readily available.

Medical Applications

The equivalent of the tin mines, and any other mine, is currently missing out. In application domains such as medicine we face challenges, firstly because the complexity of the system is much greater than that of speech, vision or even the game of Go. Our interventions are often at a biochemical level, and yet their manifestations occur at the global level of our health.

For rare or complex diseases, those driven by a combination of environmental and genetic causes, the current set of inefficient models means we will never have sufficient data to learn what we need to know to diagnose early and deliver the cures we need.

Separate Condenser

The steam engine is far more associated in our minds with the name of James Watt. Watt made the steam engine practical by introducing the separate condenser. Rather than directly injecting water into the cylinder, he sucked the steam out of the cylinder and cooled it separately.

This was more efficient as the cylinder itself no longer had to go through cycles of heating and cooling. The resulting doubling in efficiency made the steam engine practical, not just for Cornish tin mines, but for railways and traction engines.

Intelligence

My definition of intelligence is the use of information to save energy.3

Intelligent decision making implies that we assimilate the facts and make a decision that reduces our expenditure relative to actions we would have taken had we not had those facts. Under that definition, we can become more intelligent by either saving more energy with our resulting decisions, or by using less information.

In a very real sense there is currently a data efficiency deficit, just as large as the efficiency deficit present in Newcomen’s engine. Machine learning needs its separate condenser moment. What we require is a revolution in data efficiency equivalent to Watt’s separate condenser moment.

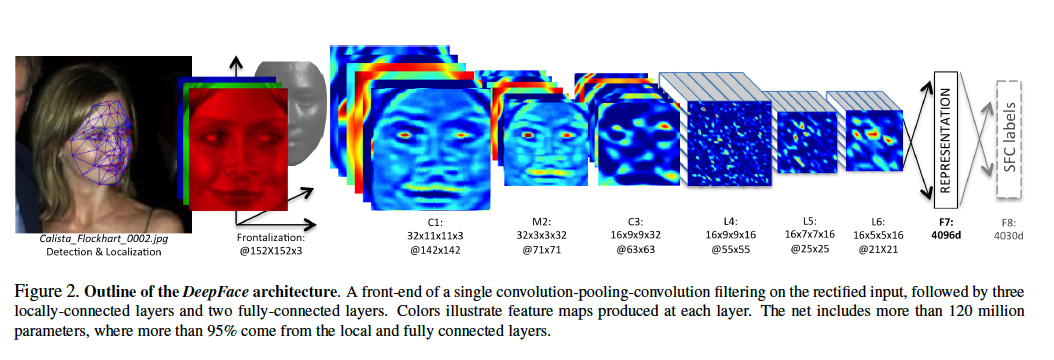

Function Composition and Deep LearningArchitecture of DeepFace, Facebook’s recognition system from their paper. See footnote for link.4In mathematics we can sometimes think of a function as a deterministic process. So we can think of deep learning as a combination of processes. Each individual process is composed together to create a more complex process. Presentation of data to the process allows us to apply a set of transformations to reach a result. Above is an example from Facebook’s DeepFace algorithm, the aim is to classify whether or not this is Calista Flockhart’s face.

In machine learning this is known as deep learning. For some authors it is related to the brain or a fundamental way of thinking about AI, but we can simply think of it as a sensible idea of applying a set of simple transformations to an image to build a complex transformation.
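
As a minimal sketch of this idea of composition, the Python below chains three simple deterministic transformations into one more complex function. The helper names `affine` and `compose`, and the particular numbers, are illustrative assumptions and are not taken from DeepFace.

```python
import math
from functools import reduce


def affine(weight, bias):
    """Return a simple transformation x -> weight * x + bias."""
    return lambda x: weight * x + bias


def compose(*layers):
    """Compose layers left to right, so compose(f, g)(x) == g(f(x))."""
    return lambda x: reduce(lambda value, layer: layer(value), layers, x)


# Three simple deterministic steps chained into one more complex function.
# The weights and biases here are arbitrary illustrative values.
deep_function = compose(affine(2.0, -1.0), math.tanh, affine(0.5, 0.25))

# Presenting a data point applies each transformation in turn.
output = deep_function(0.5)
print(output)
```

Each element passed to `compose` plays the role of one layer; a real network differs mainly in that each layer acts on many values at once and has far more parameters.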

The challenge of machine learning is how to determine what these transformations should be. Each of the simpler deterministic transformations can actually have very many parameters. In the case of DeepFace there are more than 120 million parameters overall.
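
To get a feel for how quickly parameters accumulate, here is a rough sketch that counts the weights and biases in a stack of fully connected layers. The function name and the layer sizes are hypothetical, chosen only to make the arithmetic concrete; they are not DeepFace's actual layers.

```python
def dense_parameter_count(layer_sizes):
    """Count weights and biases in a stack of fully connected layers.

    A layer mapping n_in values to n_out values has n_in * n_out weights
    plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical sizes: 1024 inputs, two hidden layers, 2 outputs.
hypothetical_sizes = [1024, 512, 256, 2]
total_parameters = dense_parameter_count(hypothetical_sizes)
print(total_parameters)
```

Even this small, made-up stack has several hundred thousand parameters, which gives some sense of how a large image model can reach the hundreds of millions.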

When we think of deep learning, we can think of any given data point passing through the deterministic processes like a ball falling through an early pinball machine (or a Pachinko machine)5. Each layer of pins is equivalent to a layer in the deep model.

The objective of machine learning is to reconstruct the configuration of those pins in such a way that Calista’s face is correctly detected. Think of the face as a set of initial conditions, and the face passes through each process to emerge in modified form. The task of inference is to ensure that the right set of initial conditions leads to the right conclusion.

Our deep model is like a Pachinko machine, but one where we have control over the location of the pins. The objective in deep learning is to move the pins around in such a way that for the right set of initial conditions (as given by the image in our analogy), the correct result is achieved.

The parameters of the model are sometimes known as network weights. They are like the positions of the pins. So we can think of DeepFace as having 120 million pins in its Pachinko machine.
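
Moving the pins can be sketched in a few lines. The text above doesn't say how the pins get moved; the example below assumes plain gradient descent on a squared error, which is one standard choice. It is a toy with only two "pins" (`w1` and `w2`), one input and one desired output, and all of the names and numbers are illustrative assumptions.

```python
import math


def model(x, w1, w2):
    """A tiny two-layer model: scale, squash with tanh, scale again."""
    return w2 * math.tanh(w1 * x)


def loss_and_gradient(x, target, w1, w2):
    """Squared error and its derivatives with respect to w1 and w2."""
    hidden = math.tanh(w1 * x)
    error = w2 * hidden - target
    grad_w1 = 2.0 * error * w2 * (1.0 - hidden ** 2) * x
    grad_w2 = 2.0 * error * hidden
    return error ** 2, grad_w1, grad_w2


# One "initial condition" and the result we want it to produce.
x, target = 1.0, 0.5

# Starting pin positions and step size (arbitrary illustrative values).
w1, w2 = 0.5, 0.5
learning_rate = 0.1

initial_loss, _, _ = loss_and_gradient(x, target, w1, w2)

# Repeatedly nudge each pin in the direction that reduces the error.
for _ in range(500):
    _, grad_w1, grad_w2 = loss_and_gradient(x, target, w1, w2)
    w1 -= learning_rate * grad_w1
    w2 -= learning_rate * grad_w2

final_loss, _, _ = loss_and_gradient(x, target, w1, w2)
print(initial_loss, final_loss, model(x, w1, w2))
```

With two pins and a single example this is easy. The difficulty described in this post comes from having millions of pins, which is why so many examples are needed to pin them all down.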

We can think of what the network ‘thinks’ about a particular pattern as being the left-right position of the ball as it falls through the network. Of course in reality, at each layer of pins this ‘thought’ is only one value. It’s one dimensional. Real deep networks have many, many dimensions, so it’s like a Pachinko machine where the pins are in a high dimensional space, normally called a hyper-space. This means the network’s ‘thoughts’ are higher dimensional and more complex.
