What is the interaction between Topological Data Analysis and Machine Learning ? A case study shows how TDA decomposition of the data space provides useful features for improving Machine Learning results.

By Harlan Sexton (Ayasdi).People new to topological data analysis (TDA) often ask me some form of the question, "What’s the difference between Machine Learning and TDA?" It's a hard question to answer, in part because it depends on what you mean by Machine Learning (ML).

Wikipedia has this to say about ML:

"Machine learning explores the study and construction of algorithms that can learn from and make predictions on data. Such algorithms operate by building a model from example inputs in order to make data-driven predictions or decisions, rather than following strictly static program instructions."

At this level of generality one could argue that TDA is a form of ML, but I think most people who work in both of these areas would disagree.

Concrete examples of ML are rather more similar to one another than they are any example of TDA. The same can be said for TDA where examples look more like TDA than ML.

In order to explain the differences between TDA and ML, and perhaps more importantly demonstrate how and why TDA and ML play well together, I’ll make two overly simple definitions and then I’ll illustrate the point with a real example.

One simple way to define ML would be any method that posits a parameterized model of data and learns the parameters from the data.

In the same vein we can define TDA as any method that uses as its model of data only a notion of 'similarity' between data points.

In this view, ML models are much more specific and detailed, and the success of the model depends on how well it fits the underlying data. The advantage is that when the data fits the model the results can be quite remarkable - apparent chaos is replaced by near perfect understanding.

The advantage for TDA is generality.

With TDA, any notion of similarity is enough to use the methodology. Conversely, with ML you need a strong notion of similarity (and more) to make any progress with any method at all.

For example, from a long list of first names it would be impossible to reliably predict height and weight. You need more.

A key element here is that algorithms derived from topology tend to be very, very forgiving of small errors - even if your notion of similarity is somehow flawed, as long as it is 'sort of right' TDA methods will usually yield something useful.

The generality of TDA methods has another advantage over ML techniques that remain in effect even when an ML method is a good fit - namely ML methods often create detailed internal state which generate notions of similarity, making it possible to couple TDA with ML to get even more insight into a data set.

This all sounds great, but it's general enough (or if you’re feeling less charitable, vague enough) it might mean anything.

So let’s be specific.

A Random ForestTM classifier is an ensemble learning method that operates by constructing a multitude of decision trees during training and using 'majority rule' on that 'forest' (collection of decision trees) to classify non-training data.

While the details of the construction are quite interesting and clever, they aren't germane at the moment. All you need to recall about a Random Forest is that it operates on each data point by applying a collection of decision trees to the datum and returning a sequence of 'leaf nodes' (the leaf in the decision tree to which the input 'fell').

In normal operation each leaf in each tree has a class C associated with it, which is interpreted as "by the time a datum gets to this node in the tree I know it is overwhelmingly likely to be of class C." The Random Forest classifies by counting the 'leaf node class votes' from each tree and picking a winner. While highly effective on a broad range of data types, this approach drops a great deal of information on the floor.

If all you care about is the best guess for the class of a datum, then you don’t want to see that extra information, but sometimes you want more. This "extraneous" information can be made into a distance function by defining the distance between two data points as the number of times their respective 'leaf nodes' differed.

The distance function between two data points is a perfectly good metric (it is, in fact, the Hamming metric on a transformation of the data set), and as such we can apply TDA to it.

As an example, let us look at a random 5,000 point sample drawn from:

https://archive.ics.uci.edu/ml/datasets/Dataset+for+Sensorless+Drive+Diagnosis

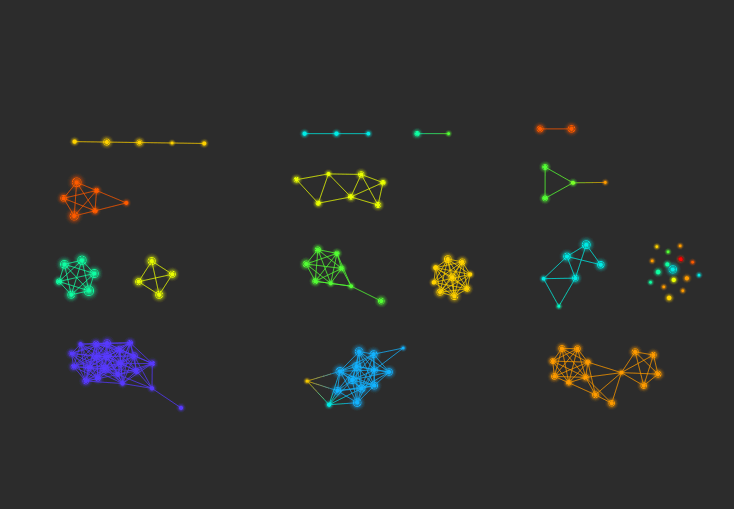

The data set is moderately complex, having 48 continuous features which appear to be unexplained measurements of signals in the electric current for the hard drives. The data also includes a classification column with 11 possible values that describe different conditions (failure modes, perhaps?) for disc drive components. One obvious thing to try is a Euclidean metric on the feature columns, and then to color the graph by classes. Since we don't know anything about the feature columns the first thing to try are the neighborhood lenses. The result is a featureless blob:

That’s disappointing.

Using some in-house debugging capabilities I looked at the scatterplot of the neighborhood lenses and I see why it's so bad - it looks like a Christmas tree:

There is apparently no localization of the classes in the Euclidean metric.

However, if you build a Random Forest on the data set, the classifier has a very small out-of-bag error, which is a strong indication for the classifier working very reliably.

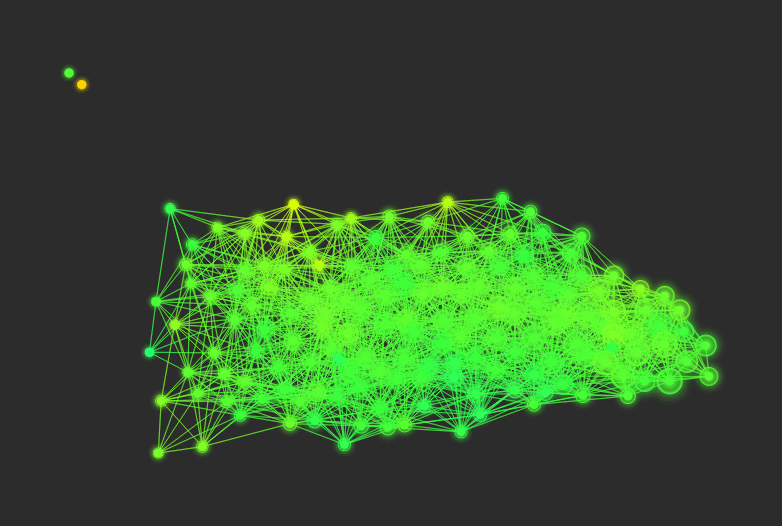

So I try making a graph using the Random Forest Hamming metric and the neighborhood lenses for that metric:

That looks very good. Just to confirm we also looked at the scatterplot of the neighborhood lenses and the results are what the figure above suggests:

What is obvious from both the graph and the scatterplot are that the Random Forest 'sees' strong structure below the level of the classification and is revealed by TDA. The reason is that RF doesn't efficiently use the "extraneous" data - but TDA does and benefits substantially from that information.

However, one might ask if that structure is illusory - perhaps an artifact of the algorithms we use somewhere in the system? In the case of this data set we can't really tell, since we don't know anything more about the groups.

Still, we have used the Random Forest metric on customer data to analyze possible failure modes for thousands of complex devices based on data collected during device burn-in. The classes were based on post-mortems done on devices returned to the manufacturer for various reasons (not all of which included failures).

What we found in this case was a Random Forest metric did a good job of classifying the failures, and the character of the pictures we got were similar to those above. More importantly, we discovered the distinct groups within a given failure mode sometimes had distinct causes.

The takeaway in both of these cases is that these causes would have been much more difficult to find without the further decomposition of the space that we got using TDA with the Random Forest.

The example we have just seen shows how TDA can work with a machine learning method to get better results than are possible by either technique individually.

This is what we mean when we say ML & TDA: Better Together.

雷达卡

雷达卡

提升卡

提升卡 置顶卡

置顶卡 沉默卡

沉默卡 变色卡

变色卡 抢沙发

抢沙发 千斤顶

千斤顶 显身卡

显身卡

京公网安备 11010802022788号

京公网安备 11010802022788号